Security Models of Language Models

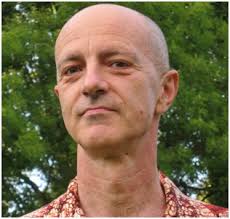

Dusko Pavlovich, University of Hawaii

The logic of attention enables Large Language Models (LLMs) to learn not only the languages but also the protocols sampled in their training corpora. That is how their chatbots produce not just well-formed sentences but also well-mannered conversations.

As readily trainable speakers and protocol participants, Large Language Models are an effective tool of influence and manipulation, opening an avenue to a new family of psychological operations. This concrete threat, already rising over the horizon, embodies the strategic situation discussed in the C3E presentation at Keystone, CO in 2011, and presented at the New Security Paradigms Workshop the same year. The scientific approach proposed there, through games of incomplete information and one-way algorithmics, has in the meantime become urgently needed and practically realizable.

Dusko Pavlovich studied mathematics in Utrecht and worked at McGill and Imperial College London before leaving academia in 1999 to work at Kestrel Institute. He returned to academia gradually, first as a Visiting Professor at Oxford (2007-2012), then as a Professor of Information Security at Royal Holloway (2010-2014), and since 2014 as a Professor of Computer Science (by courtesy of Mathematics) at the University of Hawaii at Manoa. In 2021-22, he also held the Excellence Professorship at Radboud University. Dusko's publications span a wide range of research interests, from mathematics and quantum information, through theoretical computer science and software engineering, to his main focus on security. The textbook “Programs as diagrams”, which provides a shortcut to the prerequisites for teaching security science, recently appeared in Springer-Nature’s flagship series on Theory and Applications of Computability. His current studies of AI safety and security are described in the draft book “Language processing in humans and computers”, posted at https://arxiv.org/abs/2405.14233