Cybersecurity Snapshots #39 - Exascale Supercomputers


Supercomputers are used to model and simulate complex, dynamic systems that would be too expensive, impractical, or impossible to demonstrate physically. They have changed the way scientists study the evolution of the universe, biological systems, weather, and even renewable energy. A new class of machine, the exascale supercomputer, is now coming into use. Exascale supercomputers allow scientists to simulate the complex processes involved in stockpile stewardship, medicine, biotechnology, advanced manufacturing, energy, material design, and the physics of the universe more quickly and at higher resolution.

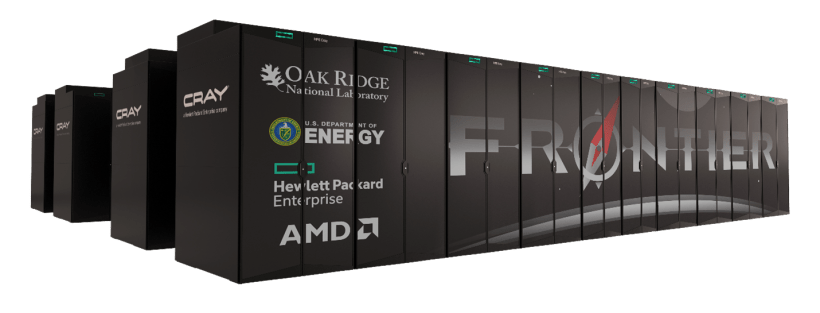

In 2018, Oak Ridge National Laboratory unveiled Summit as the world's most powerful and smartest scientific supercomputer, with a peak performance of 200,000 trillion calculations per second, or 200 petaflops. At its debut, Summit was eight times more powerful than what had been America's top-ranked system. In 2022, Oak Ridge National Laboratory unveiled Frontier, the first declared exascale computer. Frontier has a peak performance of 2 quintillion calculations per second, or 2 exaflops. Frontier will soon face competition from other exascale supercomputers such as El Capitan, housed at Lawrence Livermore National Laboratory, and Aurora, which will reside at Argonne National Laboratory.
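The unit arithmetic behind these figures can be checked in a few lines. The sketch below is only an illustration: the variable names are hypothetical, and the numbers are the quoted peak values, not measured application performance.

```python
# Illustrative unit arithmetic using the peak figures quoted in the text.
# All names here are made up for this example.
PETA = 10**15          # "peta" prefix: one quadrillion
EXA = 10**18           # "exa" prefix: one quintillion
TRILLION = 10**12
QUINTILLION = 10**18

summit_peak_flops = 200 * PETA      # 200 petaflops
frontier_peak_flops = 2 * EXA       # 2 exaflops

# Express each peak in the plain-language units used in the text.
summit_trillions = summit_peak_flops // TRILLION
frontier_quintillions = frontier_peak_flops // QUINTILLION

# Ratio of the two quoted peak figures only; real workloads may
# speed up by a different factor.
peak_ratio = frontier_peak_flops / summit_peak_flops

print(f"Summit:   {summit_trillions:,} trillion calculations per second")
print(f"Frontier: {frontier_quintillions} quintillion calculations per second")
print(f"Quoted peak ratio (Frontier / Summit): {peak_ratio:.0f}x")
```

An exaflop is simply 1,000 petaflops, so the step from Summit's quoted peak to Frontier's is a factor of ten on paper.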

The new exascale supercomputers are projects of the Department of Energy (DOE) and its National Nuclear Security Administration (NNSA). The DOE oversees these labs and a network of others across the country. NNSA is tasked with keeping watch over the nuclear weapons stockpile, and one of exascale computing's reasons for being is to run calculations that help maintain that arsenal. The DOE stated that once scientists finish commissioning Frontier, it will be dedicated to fundamental research. The DOE hopes to illuminate core truths in various fields, such as how energy is produced, how elements are made, and how the dark parts of the universe spur its evolution, all through almost-true-to-life simulations that would not have been possible even with the supercomputers of a few years ago.

Frontier is made up of nearly 10,000 Central Processing Units (CPUs), which execute instructions for the computer and are generally built from integrated circuits, and almost 38,000 Graphics Processing Units (GPUs). GPUs were originally created to display visual content quickly and smoothly in gaming, but they have been repurposed for scientific computing, in part because they are good at processing information in parallel. According to the DOE, the two kinds of processors inside Frontier are linked: the GPUs do repetitive algebraic math in parallel, which frees the CPUs to direct tasks faster and more efficiently. By breaking scientific problems into a billion or more tiny pieces, Frontier lets each of its processors take its own small bite of the problem. The 9,472 nodes in the supercomputer are also connected in such a way that they can pass information quickly from one place to another. Importantly, the DOE noted, Frontier does not just run faster than machines of the past. It also has more memory, which allows it to run more extensive simulations and hold large amounts of information in the same place it is processing the data.
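The idea of splitting one large problem into pieces, handing each piece to a separate worker, and combining the partial results can be sketched as follows. This is a toy illustration, not how Frontier's software is actually written: the function names are hypothetical, Python threads stand in for nodes, and the "work" is a placeholder sum of squares. Only the node count and the billion-piece figure come from the text.

```python
from concurrent.futures import ThreadPoolExecutor


def split_evenly(total_pieces, n_workers):
    """Return (start, stop) ranges that cover range(total_pieces) as evenly as possible."""
    base, extra = divmod(total_pieces, n_workers)
    ranges = []
    start = 0
    for worker in range(n_workers):
        # The first `extra` workers take one additional piece each.
        size = base + (1 if worker < extra else 0)
        ranges.append((start, start + size))
        start += size
    return ranges


def work_on_piece_range(bounds):
    """Placeholder computation: sum of squares over one worker's share."""
    start, stop = bounds
    return sum(i * i for i in range(start, stop))


def run_decomposed(total_pieces, n_workers):
    """Split the problem, let each worker handle its share, then combine."""
    ranges = split_evenly(total_pieces, n_workers)
    with ThreadPoolExecutor(max_workers=n_workers) as pool:
        partial_results = list(pool.map(work_on_piece_range, ranges))
    return sum(partial_results)


# Small demonstration: the decomposed result matches the serial one.
serial = sum(i * i for i in range(1000))
decomposed = run_decomposed(1000, 8)
print(serial == decomposed)

# Only the bookkeeping (no computation) for a billion pieces over 9,472 nodes.
node_ranges = split_evenly(1_000_000_000, 9_472)
sizes = [stop - start for start, stop in node_ranges]
print(min(sizes), max(sizes))
```

The sketch shows the split-and-combine pattern only. Python threads do not speed up CPU-bound work like this, and production codes on such machines rely on dedicated parallel programming tools and on the fast links between nodes that the text describes.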

The DOE stated that with its power, Frontier could teach humans things about the world that might otherwise have remained opaque. In meteorology, it could make hurricane forecasts clearer. In chemistry, it could experiment with different molecular configurations to see which might make great superconductors or pharmaceutical compounds. And in medicine, it has already analyzed all the genetic mutations of SARS-CoV-2, the virus that causes COVID-19. Frontier cut the time that calculation took from a week to a day, which allowed scientists to understand how these mutations affect the virus's contagiousness.

Douglas Kothe, associate laboratory director of computing and computational sciences at Oak Ridge, stated that, in principle, the community could have developed and deployed an exascale supercomputer much sooner, but it would not have been usable, useful, and affordable by their standards. Kothe noted that obstacles such as huge-scale parallel processing, energy consumption, reliability, memory, storage, and a lack of software ready to run on such supercomputers stood in the way of meeting those standards. Years of focused work with the high-performance computing industry lowered those barriers enough to finally satisfy scientists. Frontier's upgraded hardware is the main factor behind its improvements, but hardware alone does not do scientists much good if they lack software that can harness the machine's new power. That is why an initiative called the Exascale Computing Project (ECP), which brings together the DOE, NNSA, and industry partners, has sponsored 24 initial science-coding projects alongside the supercomputers' development. Frontier can process information seven times faster and hold four times more of it in memory than its predecessors. In the future, we can look forward to the new advancements and knowledge that exascale supercomputers will help us achieve.