Nazli Choucri, Professor of Political Science at MIT, is Senior Faculty at the Center for International Studies (CIS) and Faculty Affiliate at the Institute for Data, Systems, and Society (IDSS). She focuses on international relations and cyberpolitics, with special attention to sources of conflict and war, on the one hand, and strategies for security and sustainability, on the other. Professor Choucri directs the research initiatives of the CyberPolitics & Policy Lab at MIT and the related knowledge networking system CyberIR@MIT, both motivated by the cyber-inclusive view of international relations and the global system developed by the MIT-Harvard Project Explorations in Cyber International Relations (ECIR), for which she served as Principal Investigator.

Dr. Choucri is a Fellow of the American Association for the Advancement of Science (AAAS). She is author and/or editor of twelve books, most recently Cyberpolitics in International Relations (2012) and International Relations in the Cyber Age: The Co-Evolution Dilemma, with David D. Clark (2019). She is the architect and Director of the Global System for Sustainable Development (GSSD), an evolving knowledge and networking system centered on sustainability problems and solution strategies, and the Founding Editor of the MIT Press Series on Global Environmental Accord.

Professor Choucri has served as General Editor of the International Political Science Review and, for two terms, on the Editorial Board of the American Political Science Review. She also served two terms as President of UNESCO's Management of Social Transformation Program. Her international research and advisory activities include collaborative work in Algeria, Canada, Colombia, Egypt, France, Germany, Greece, Honduras, Japan, Kuwait, Mexico, Pakistan, Qatar, Sudan, Switzerland, Syria, Tunisia, Turkey, the United Arab Emirates, and Yemen. She is a board member of the Boston Global Forum (BGF) and a founding member of the Artificial Intelligence World Society (AIWS).

PROJECT SYNOPSIS

Problem

Mounting concerns about the safety and security of critical infrastructure have resulted in an intricate ecosystem of cybersecurity guidelines and policies, as well as directives and compliance measures. By definition, such guidelines and policies are written in linear, sequential text form (word after word, chapter after chapter), often with different segments presented in different documents in the policy ecosystem. In general, the design and description of the target cyber-physical system (CPS) structures are also in text form. This situation makes it difficult to integrate, or even to understand, the policy-technology-security interactions. It also impedes effective risk assessment. In short, individually or collectively, these features undermine cybersecurity initiatives. What is missing are fundamental policy analytics to support CPS cybersecurity and reduce barriers to policy implementation.

Goals

The overarching goal of this project is to develop analytical methods to strengthen cybersecurity policies in support of the national strategy for cybersecurity, as outlined in Presidential Executive Orders and National Defense Authorization Acts.

Operationally, the goal is to create policy analytics for cybersecurity, designed to:

- Overcome the limitations of the conventional text-based policy form,

- Extract metric-based knowledge embedded in policy guidelines and/or distributed in policy-ecosystems,

- Align policy directives to intended targets in the relevant CPS structure, and

- Assist users with the "how-to" of connecting cybersecurity policy to CPS properties and facilitate implementation.

Strategically, our goal is to construct a suite of tools for application to policy directives, regulations, and guidelines across diverse CPS domains and properties. The intent is to help users address mission-related challenges, concerns or contingencies.

Challenges

The research challenge is three-fold, namely to:

- Develop structured system models from text-based descriptions of system properties,

- Transform policy guidelines and directives from text to metrics, and

- Connect policy directives to CPS properties.

Addressing these challenges is essential in order to:

- Identify major policy-relevant CPS properties and parameters,

- Situate vulnerabilities and impacts,

- Map security requirements to security objectives, and

- Support responses of CPS to targeted policy controls.

Data & Proof of Concept

Our "raw" database consists of major policy reports on CPS cybersecurity prepared by the National Institute of Standards and Technology (NIST), as well as NIST analyses of CPS properties. Considerable efforts are continually being made to "mine" NIST materials; however, few initiatives explore the potential value added of drawing on multiple methods for knowledge extraction, or of developing analytical tools that support user understanding of policy directives, support analysis, and eventually enable targeted action. While our approach appreciates and is informed by such efforts, it is distinctive in developing a suite of cybersecurity policy analytics, based entirely on metricized text of policy documents and applied to metricized models of CPS. The "proof of concept" focuses on analytics for cybersecurity policy applied to the smart grid for electric power systems.

BACKGROUND & FOUNDATIONS

The research initiative on Explorations in Cyber International Relations (ECIR) provides background and foundations for this Project. A collaboration of MIT and Harvard University, with Nazli Choucri as Principal Investigator, ECIR was completed under a Minerva Project of the U.S. Department of Defense (2009–2014).

The research problem is this: distinct features of cyberspace—such as time, scope, space, permeation, ubiquity, participation and attribution—challenge traditional modes of inquiry in international relations and limit their utility. The interdisciplinary MIT-Harvard ECIR research project explores various facets of cyber international relations, including its implications for power and politics, conflict and war.

The primary mission and principal goal is to increase the capacity of the nation to address the policy challenges of the cyber domain. Our research is intended to influence today’s policy makers with the best thinking about issues and opportunities, and to train tomorrow’s policy makers to be effective in understanding choice and consequence in cyber matters.

Accordingly, the ECIR vision is to create an integrated knowledge domain of international relations in the cyber age that (a) is multidisciplinary, theory-driven, and technically and empirically grounded; (b) clarifies threats and opportunities in cyberspace for national security, welfare, and influence; (c) provides analytical tools for understanding and managing transformation and change; and (d) attracts and educates generations of researchers, scholars, and analysts for international relations in the new cyber age.

See Final Report of the MIT–Harvard University Project on Explorations in Cyber International Relations.

See publications, reports, theses, and addendum to the ECIR final report.

PROJECT PARTICIPANTS

- Gaurav Agarwal, Alumnus, MIT (2018-2022)

- Jerome Anaya, Researcher, Political Science, MIT (2018-2019, 2022)

- Lauren Fairman, Researcher, Political Science, MIT (2019-2021)

- Allen Moulton, Research Scientist, Sociotechnical Systems Research Center (SSRC), MIT (2021)

- Saurabh Amin, Associate Professor, Civil and Environmental Engineering, MIT (2018-2019)

- James Gordon, MIT Undergraduate Research Opportunity Program - UROP (2020)

- Nechama Huba, Student, Wellesley College, Junior-Senior (2021-2023)

- Joseph Ward, MIT Undergraduate Research Opportunity Program - UROP (2021)

RESULTS, PRODUCTS & ARTIFACTS

Project results and products include, but are not limited to: (a) methods to examine the implications of cybersecurity directives and guidelines directly applicable to the system in question; (b) information about relative vulnerability pathways throughout the whole or parts of the system-network, as delineated by the guidelines documents; (c) insights from contingency investigations, that is, "what...if..."; (d) a design framework for information management within the organization; and (e) ways to facilitate information flows essential for cybersecurity-related decisions.

Results To Date

Thus far, we have aligned the project vision and mission to the priorities of the Program on National Cybersecurity Policy and identified the overall policy-relevant ecosystem. By focusing on national cybersecurity policies for securing the nation's critical infrastructure, we identified the policy ecosystem and core policy documents pertaining to smart grid for proof of concept.

We have extracted text-based data and created a metric-based Dependency Structure Matrix (DSM) of NIST’s "as-is" reference model for Smart Grid. We also completed the design and operational strategy for our data extraction and linkage method. This involves developing the method for moving from "policy-as-text" to "text-as-data" in the process of constructing the Suite of Policy Analytics for CPS cybersecurity.
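As an illustration of what a metric-based DSM looks like in practice, the following minimal sketch builds a binary dependency matrix from a list of extracted dependency pairs. The component names and dependencies are hypothetical placeholders, not entries from the NIST reference model, and the function `build_dsm` is an assumption introduced here rather than the Project's own tooling.

```python
from typing import Iterable


def build_dsm(
    components: list[str],
    dependencies: Iterable[tuple[str, str]],
) -> list[list[int]]:
    """Return a binary DSM where dsm[i][j] == 1 means component i depends on component j."""
    index = {name: i for i, name in enumerate(components)}
    dsm = [[0] * len(components) for _ in components]
    for source, target in dependencies:
        dsm[index[source]][index[target]] = 1
    return dsm


# Hypothetical components and first-level dependencies, for illustration only.
components = ["control_center", "substation", "smart_meter", "market_interface"]
dependencies = [
    ("substation", "control_center"),
    ("smart_meter", "substation"),
    ("market_interface", "control_center"),
    ("control_center", "market_interface"),
]

dsm = build_dsm(components, dependencies)

# Row sums count how many components each one depends on;
# column sums count how many components depend on each one.
depends_on_count = [sum(row) for row in dsm]
dependents_count = [sum(col) for col in zip(*dsm)]
```

Row and column sums of this kind are one simple way to turn the matrix into per-component metrics; other weightings are equally possible.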

In short, we (a) created metric-models of policies and guidelines, (b) created metric-models of CPS, and (c) captured the value added of applying policy directives to CPS properties. Each was based on a set of pre-tests, executed in operational form, and provided the foundation for the next step.

In the process, we developed rules and methods for extracting and metricizing data from text-form documents, and then constructed the necessary issue and policy-specific linked database for the relevant policy-ecosystem.
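To make the idea of "text-as-data" concrete, here is a deliberately simple sketch that converts a passage of policy text into counts of obligation terms. The term list, the sample sentences, and the function `metricize` are all assumptions chosen for illustration; they are not the Project's extraction rules, which are not specified here.

```python
import re
from collections import Counter

# Hypothetical rule set: modal terms that often signal the strength of a directive.
OBLIGATION_TERMS = ("shall", "must", "should", "may")


def metricize(text: str) -> Counter:
    """Count occurrences of each obligation term in the text (case-insensitive)."""
    words = re.findall(r"[a-z]+", text.lower())
    return Counter(word for word in words if word in OBLIGATION_TERMS)


# Invented sample text, not quoted from any policy document.
sample = (
    "The operator shall log access. Devices must authenticate. "
    "Vendors should patch promptly. The operator shall review logs."
)

counts = metricize(sample)
```

Counts like these could populate a linked database keyed by document and section, although a real rule set would need to handle context, negation, and cross-references.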

Jointly, these steps allowed us to create (i) initial exploratory tools for analysis of system information, and (ii) a core dependency matrix (DSM) of the CPS based on the identification of first-level information dependencies. The DSM was (a) examined and validated and (b) further transformed as needed into clusters and partitions of structure and process, in order to (c) explore CPS properties for policy and reveal interconnections and "hidden features."
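One common way to partition a DSM is to group components that depend on each other, directly or indirectly, into coupled blocks. The sketch below does this with a transitive closure (Warshall's algorithm). It is offered as one possible approach under the assumption of a binary DSM; the matrix values are invented and the source does not state which partitioning method the Project used.

```python
def transitive_closure(dsm: list[list[int]]) -> list[list[bool]]:
    """Return reach[i][j] == True when component i depends on j directly or indirectly."""
    n = len(dsm)
    reach = [[bool(dsm[i][j]) or i == j for j in range(n)] for i in range(n)]
    for k in range(n):
        for i in range(n):
            if reach[i][k]:
                for j in range(n):
                    if reach[k][j]:
                        reach[i][j] = True
    return reach


def coupled_blocks(dsm: list[list[int]]) -> list[list[int]]:
    """Group component indices into blocks of mutually dependent components."""
    reach = transitive_closure(dsm)
    n = len(dsm)
    seen: set[int] = set()
    blocks: list[list[int]] = []
    for i in range(n):
        if i in seen:
            continue
        block = [j for j in range(n) if reach[i][j] and reach[j][i]]
        seen.update(block)
        blocks.append(block)
    return blocks


# Hypothetical 5x5 DSM: row i has a 1 in column j when component i depends on j.
example_dsm = [
    [0, 1, 0, 0, 0],
    [1, 0, 0, 0, 0],
    [0, 1, 0, 1, 0],
    [0, 0, 1, 0, 0],
    [0, 0, 0, 1, 0],
]

blocks = coupled_blocks(example_dsm)
```

Blocks with more than one member indicate feedback loops among components, which is one kind of interconnection that is hard to see in a purely textual description.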

The foregoing served as the basis upon which added policy imperatives, also in text form, are incorporated in expanded DSM forms.

Throughout, we have addressed critical research tasks, notably (i) identifying and undertaking essential corrections; (ii) replicating the core structured DSM model for validation purposes; (iii) extending the core DSM to span greater system structure; (iv) conducting general applications of project methods; and (v) exploring alternative approaches to automation of the entire research process.

Contributions to Hard Problems

Our major contribution is to the hard problem of policy-governed secure collaboration, with secondary contributions to the other hard problems. We examine the value of "text-to-metrics" in a complex cyber-physical system where threats to operations serve as driving motivations for policy responses.

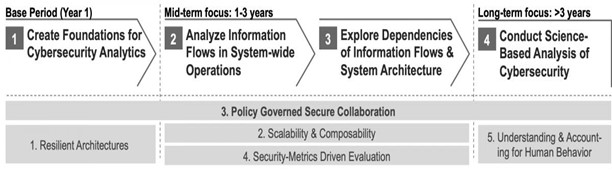

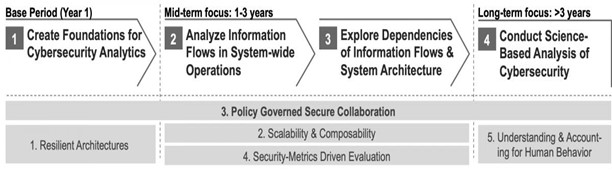

This Project directly addresses the hard problem of “policy-governed secure collaboration” with proof of concept at the enterprise level (smart grid for electrical power systems). The accompanying figure displays the near-, mid-, and long-term Project goals (arrow format). The first grey bar (top) shows “Policy Governed Secure Collaboration” as the primary hard problem across all research periods. The figure also shows where the four other hard problems (grey bars) align with and across the research phases (the arrow format).

Figure. Project design: research phases in relation to SoS hard problems.

The Project is especially relevant to the Science of Security & Privacy Program because the work plan is anchored in metricized policies that are then applied to the CPS model in order to (a) situate salient system-wide properties, (b) locate vulnerabilities, (c) map security requirements to security objectives, and (d) advance research on how multiple system features interact with multiple security requirements and affect the cybersecurity of critical cyber-physical enterprises.

Potential Applications

Two issues were raised by NSA staff and discussed with the Project PI:

- How can the methods and techniques being developed (or already developed) for Policy Analysis for Cybersecurity of Cyber-Physical Systems assist in creating an Automatic Compliance Monitoring Application? The goal would be to place such an application on a system running data analysis applications (analytics), with the purpose of ensuring that those analytics comply with all relevant policies and regulations.

- How can the Project's research approach be applied to analytics of the NIST Privacy Framework?

PUBLICATIONS

Publications completed under the Science of Security & Privacy Lablet at MIT are deposited in, and available on, DSpace@MIT.

Books

- Choucri, N., & Clark, D. D. (2019). International relations in the cyber age: The co-evolution dilemma. MIT Press.

Book Chapters

- Choucri, N. (2021). Framework for an artificial intelligence international accord. In N. A. Tuan (Ed.), Remaking the world: Toward an age of global enlightenment (pp. 27-44). Boston Global Forum, United Nations Academic Impact.

- Choucri, N., & Agarwal, G. (2017). The theory of lateral pressure: Highlights of quantification and empirical analysis. In W. R. Thompson (Ed.), The Oxford Encyclopedia of Empirical International Relations Theory. Oxford University Press.

Journal Articles

- Klemas, T., Lively, R., Atkins, S., & Choucri, N. (2021). Accelerating cyber acquisitions: Introducing a time-driven approach to manage risks with less delay. The ITEA Journal of Test and Evaluation, 42, 194-202.

- Huang, K., Madnick, S., Choucri, N., & Zhang, F. (2021). A systematic framework to understand transnational governance for cybersecurity risks from digital trade. Global Policy, 1-14.

- Klemas, T., Lively, R. & Choucri, N. (2018). Cyber acquisition: Policy changes to drive innovation in response to accelerating threats in cyberspace. Proceedings of the 2018 International Conference on Cyber Conflict (CYCON U.S.), 103-120.

Conference Proceedings

- Choucri, N., & Agarwal, G. (2021). Complexity of international law for cyber operations. Proceedings of the 2021 IEEE International Symposium on Technologies for Homeland Security (HST), 1-7.

- Choucri, N., & Agarwal, G. (2019). Securing the long-chain of cyber-physical global communication infrastructure. Proceedings of the 2019 IEEE International Symposium on Technologies for Homeland Security (HST), 1-7.

- Dukakis, M., Choucri, N., Cytryn, A., Jones, A., Nguyen, T. A., Patterson, T., Reveron, D., & Silbersweig, D. (2018). The AIWS 7-layer model to build next generation democracy BGF-G7 Summit 2018. The Boston Global Forum, & Michael Dukakis Institute for Leadership and Innovation.

- Choucri, N., & Agarwal, G. (2017). Analytics for smart grid cybersecurity. Proceedings of the 2017 IEEE International Symposium on Technologies for Homeland Security (HST), 1-3.

Reports

- Choucri, N., & Anaya, J. (2024). Policy Analytics for Cybersecurity of Cyber-Physical Systems Compilation.

Working Papers

- Choucri, N., & Agarwal, G. (2022). Complexity of international law for cyber operations (Research Paper No. 2022-10). MIT Political Science Department.

- Choucri, N., Fairman, L., & Agarwal, G. (2022). CyberIR@MIT: Knowledge for science, policy, practice (Working Paper No. 2022-09). MIT Political Science Department.

- Choucri, N., & Agarwal, G. (2021). New Hard Problems in Science of Security (prepared for the Symposium in the Science of Security, HotSoS). MIT Political Science Department.

- Moulton, A., Madnick, S. E., & Choucri, N. (2020). Cyberspace operations functional capability reference architecture from document text (Working Paper CISL# 2020-24). MIT Sloan School of Management.

- Dukakis, M., Vīķe-Freiberga, V., Cerf, V., Choucri, N., Lagumdzija, Z., Nguyen, T. A., Patterson, T., Pentland, A., Rotenberg, M., & Silbersweig, D. (2020). Social contract for the AI age. Artificial Intelligence World Society (AIWS), & Michael Dukakis Institute for Leadership and Innovation.

- Dukakis, M., Nguyen, T. A., Choucri, N., & Patterson, T. (2018). The concept of AI-government: Core concepts for the design of AI-government (Concept Paper). Boston Global Forum, & Michael Dukakis Institute for Leadership and Innovation.

- Choucri, N., Agarwal, G., & Koutsoukos, X. (2018). Policy-governed secure collaboration: Toward analytics for cybersecurity of cyber-physical systems. MIT Political Science Department.

Posters

- Choucri, N., Madnick, S., & Agarwal, G. (2018, July 16). Analytics for Cybersecurity of Cyber-Physical Systems [Conference & Poster session]. Cybersecurity at MIT Sloan Annual Conference: Answering the Question "How Secure Are We?" MIT Sloan School of Management, Cambridge, MA.

- Choucri, N., & Agarwal, G. (2022). Analytics for Cybersecurity of Smart Grid: Identifying Risk and Assessing Vulnerabilities [Poster]. Cambridge, MA.

- Choucri, N., & Agarwal, G. (2022). Managing Risk: Capturing Full-Value of Cybersecurity Guidelines [Poster]. MIT, Cambridge, MA.

- Choucri, N., & Agarwal, G. (2022). Analytics for Enterprise Cybersecurity: Management of Smart Grid Cyber Risks & Vulnerabilities [Poster]. Cambridge, MA.

- Choucri, N., & Agarwal, G. (2022). Analytics for Enterprise Cybersecurity Application Example Summary [Poster]. MIT, Cambridge, MA.

OUTREACH

Project-Related Websites

MIT CyberPolitics & Policy Lab, under contribution, includes the research conducted under this grant and related research initiatives addressing US national security and cybersecurity.

CyberIR@MIT: Knowledge for Science, Policy, Practice is a dynamic, interactive ontology-based knowledge and networking system focusing on the dynamic, diverse, and complex interconnections of cyberspace & international relations.

SoS & Other Meeting Presentations

- Choucri, N. (2021, July 13-14). Analytics of Cybersecurity Policy: Value for Artificial Intelligence? [Conference session]. Summer 2021 Quarterly Science of Security Lablet Meeting, online.

- Choucri, N. (2021, April 12-15). Special Session on Science of Security Hard Problems: Rethinking Security Measures [Conference session]. 2021 Symposium in the Science of Security (HotSoS), online.

- Choucri, N. (2020, December 2-3). The Dynamics of Cyberpolitics [Conference session]. CyberSecure 2020, online.

- Choucri, N. (2020, November 12-13). The Quad Group, AIWS Social Contract and Solutions for World Peace & Security [Conference session]. Riga Conference 2020, online.

- Choucri, N. (2020, January 15-16). Application of Policy-based Methods for Risk Analysis [Conference session]. Winter 2020 Quarterly Science of Security and Privacy Lablet Meeting, Raleigh, North Carolina.

- Choucri, N. (2019, August 2). Analytics for cybersecurity of cyber-physical systems [Conference session]. Networking and Information Technology Research and Development (NITRD), online.

- Choucri, N. (2019, July 9-10). Analytics for Cybersecurity of CPS—Overview and Year 1 Report [Conference session]. Summer 2019 Quarterly Science of Security and Privacy Lablet Meeting, Lawrence, Kansas, United States.

- Choucri, N. (2018, October 19-20). Bytes and Bullets: The Future of Cyber Warfare [Panel session]. New World Powers: Global Security Forum, Hartford, CT, United States.

- Choucri, N. (2018, July 31 and August 1). Panel on Transition: Panel with representatives from each lablet on ideas to make transition successful. Summer 2018 Quarterly Science of Security and Privacy Meeting, Urbana, Illinois, United States.

- Choucri, N. (2018, March 13-14). Project Kick-off [Conference session]. Science of Security Lablet Kickoff and Quarterly Meeting, College Park, MD, United States.

Other Outreach Activities

- Nazli Choucri, MIT PI, was part of the organizing committee of the 6th Annual Hot Topics in the Science of Security (HotSoS) Symposium, Nashville, Tennessee, April 1-3, 2019.

- Nazli Choucri, MIT PI, participated in the 2019 Fall Science of Security and Privacy Quarterly Lablet Meeting, Chicago, Illinois, November 5-6, 2019.

MIT Courses

Cybersecurity

MIT Course Number: 17.447/17.448–DS350; Political Science & Institute for Data, Systems, and Society, School of Engineering. Faculty: Nazli Choucri with Alexander Pentland, earlier with Stuart Madnick.

Focuses on the complexity of cybersecurity in a changing world. Examines national and international aspects of overall cyber ecology. Explores sources and consequences of cyber threats and different types of damage. Considers impacts for and of various aspects of cybersecurity in diverse geostrategic, political, business and economic contexts. Addresses national and international policy responses as well as formal and informal strategies and mechanisms for responding to cyber insecurity and enhancing conditions of cybersecurity. Students taking graduate version expected to pursue subject in greater depth through reading and individual research. OCW Link

International Relations Theory in the Cyber Age

MIT Course Number: 17.445/17.446; Political Science. Faculty: Nazli Choucri

Cyberpolitics in International Relations focuses on cyberspace and its implications for private, public, sub-national, national, and international actors and entities. It focuses on legacies of the 20th-century creation of cyberspace, changes to the international system structure, and new modes of conflict and cooperation. This course examines ways in which international relations theory may accommodate cyberspace as a new venue of politics and how cyberpolitics alters traditional modes and venues for international relations. OCW Link