C3E 2018 Challenge Problem: AI/ML

AI/ML Cyber Defense

For the Computational Cybersecurity in Compromised Environments Workshop (C3E 2018), C3E will look further into Adversarial Machine Learning (AML), its connections with Explainable Artificial Intelligence (XAI), and Decision Support (DS) vulnerabilities. Advances in machine learning (ML) are creating new opportunities to augment human perception and decision-making in large-scale computing and complex networking. Recent research has identified vulnerabilities in ML that show how an attacker can poison training datasets and invert models to evade detection or disrupt classification, construct data points that ML algorithms will mishandle, infer information about the training set, and infer information about model parameters.
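To make the "construct data points to be mishandled" threat concrete, the following is a minimal sketch of a fast gradient sign method (FGSM) style evasion step against a toy logistic-regression detector. It assumes a white-box attacker who knows the weights; the weights, feature vector, and step size `eps` are hypothetical values, not taken from any real malware model. Real malware features are often binary or otherwise constrained, so an attacker usually cannot shift every feature freely as this sketch does.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def predict_proba(w, b, x):
    """Toy detector: probability that x is malicious (class 1)."""
    return sigmoid(sum(wi * xi for wi, xi in zip(w, x)) + b)

def fgsm_step(w, b, x, y_true, eps):
    """One fast-gradient-sign step that increases the log-loss on y_true.

    For logistic regression the input gradient of the log-loss is
    (p - y_true) * w, so only its sign per feature is needed.
    """
    p = predict_proba(w, b, x)
    grad = [(p - y_true) * wi for wi in w]
    sign = [1.0 if g > 0 else -1.0 if g < 0 else 0.0 for g in grad]
    return [xi + eps * s for xi, s in zip(x, sign)]

# Hypothetical white-box weights and a sample the detector flags as malicious.
w, b = [2.0, -1.0, 0.5], -0.5
x = [1.0, 0.0, 1.0]
x_adv = fgsm_step(w, b, x, y_true=1, eps=0.8)

print(round(predict_proba(w, b, x), 3))      # 0.881 -> flagged as malicious
print(round(predict_proba(w, b, x_adv), 3))  # 0.31  -> evades the detector
```

Each feature moves by at most `eps` (an L-infinity budget), which is what keeps the adversarial sample "close" to the original while flipping the decision.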

Background

Artificial Intelligence (AI) was founded as an academic discipline in 1956 and has spawned many research subfields, such as speech recognition, machine learning, and artificial neural networks. The term AI refers to intelligence exhibited by machines. Over the past decade, optimism about the future of AI has grown as computing power has expanded, both at the enterprise and cloud level and on client desktops and mobile devices. The voice assistants Apple Siri, Google Assistant, and Amazon Alexa are good examples of natural language speech recognition.

According to the White House, the Federal Government’s investment in unclassified R&D for AI and related technologies has grown by over 40% since 2015, in addition to substantial classified investments across the defense and intelligence communities.

https://www.whitehouse.gov/briefings-statements/artificial-intelligence-american-people/

Research has surged, but it brings new concerns about data integrity, functionality, and the security of these systems. A recent study by the White House Office of Science and Technology Policy (OSTP) examined all aspects of AI research to assess progress in AI/ML and to develop a National Strategy to guide future research in addressing requirements and challenges.

The OSTP study states: “AI systems also have their own cybersecurity needs. AI-driven applications should implement sound cybersecurity controls to ensure integrity of data and functionality, protect privacy and confidentiality, and maintain availability. The recent Federal Cybersecurity R&D Strategic Plan highlighted the need for ‘sustainably secure systems development and operation.’ Advances in cybersecurity will be critical in making AI solutions secure and resilient against malicious cyber activities, particularly as the volume and type of tasks conducted by governments and private sector businesses using Narrow AI increases.” https://obamawhitehouse.archives.gov/sites/default/files/whitehouse_files/microsites/ostp/NSTC/preparing_for_the_future_of_ai.pdf

Most of these security and integrity concerns apply across the gamut of cyber-physical systems (CPS). During the development of the various government strategies, the risk of an adversary compromising an AI system was highlighted. AI/ML systems span all segments of cyberspace, from man-in-the-loop systems to fully autonomous operations. As the capabilities of AI/ML systems increase, it can be difficult for humans to understand the machine decision-making process; hence, there is concern that malicious actors could compromise these systems without human awareness. This is especially relevant to SCADA and Industrial Control Systems (ICS), which are components of the CPS within the National Critical Infrastructure.

This growing concern was highlighted in several areas of “The National Artificial Intelligence Research and Development Strategic Plan.” Specifically, the Strategic Plan highlighted the following concerns:

“AI embedded in critical systems must be robust in order to handle accidents, but should also be secure to a wide range of intentional cyber attacks. Security engineering involves understanding the vulnerabilities of a system and the actions of actors who may be interested in attacking it. While cybersecurity R&D needs are addressed in greater detail in the NITRD Cybersecurity R&D Strategic Plan, some cybersecurity risks are specific to AI systems. For example, one key research area is ‘adversarial machine learning’ that explores the degree to which AI systems can be compromised by ‘contaminating’ training data, by modifying algorithms, or by making subtle changes to an object that prevent it from being correctly identified (e.g., prosthetics that spoof facial recognition systems). The implementation of AI in cybersecurity systems that require a high degree of autonomy is also an area for further study. One recent example of work in this area is DARPA’s Cyber Grand Challenge that involved AI agents autonomously analyzing and countering cyber attacks.” https://obamawhitehouse.archives.gov/sites/default/files/whitehouse_files/microsites/ostp/NSTC/national_ai_rd_strategic_plan.pdf
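The "contaminating training data" threat can be illustrated with a toy example. The sketch below assumes a simple nearest-centroid classifier and hypothetical two-feature samples (label 0 = benign, 1 = malicious). An attacker who can inject a few mislabeled points just beyond the malicious cluster drags the benign centroid toward it, so that clean test samples start to be misclassified. It is an illustration of the principle, not a model of any fielded detector.

```python
def centroid(points):
    """Component-wise mean of a list of equal-length feature vectors."""
    return [sum(p[i] for p in points) / len(points) for i in range(len(points[0]))]

def train(data):
    """Nearest-centroid 'training': one centroid per label (0 = benign, 1 = malicious)."""
    return {label: centroid([x for x, y in data if y == label]) for label in (0, 1)}

def classify(model, x):
    """Assign x to the label whose centroid is closest (squared Euclidean distance)."""
    return min(model, key=lambda label: sum((a - c) ** 2 for a, c in zip(x, model[label])))

def accuracy(model, data):
    return sum(classify(model, x) == y for x, y in data) / len(data)

# Hypothetical clean training and test data.
clean_train = [([0, 0], 0), ([1, 0], 0), ([0, 1], 0),
               ([4, 4], 1), ([5, 4], 1), ([4, 5], 1)]
test = [([0.5, 0.5], 0), ([1, 1], 0), ([3, 3], 1), ([4, 4], 1)]

# Attacker injects four points just beyond the malicious cluster, labelled benign.
poison = [([6, 6], 0)] * 4

clean_model = train(clean_train)
poisoned_model = train(clean_train + poison)

print(accuracy(clean_model, test))     # 1.0
print(accuracy(poisoned_model, test))  # 0.75: the (3, 3) malicious sample is now missed
```

Only the training set was touched; the classifier code is unchanged, which is why poisoning can be hard to spot by inspecting the model alone.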

Concerns about an adversary’s ability to compromise an AI/ML system are the focus of the C3E 2018 Cybersecurity Challenge Problem.

AI/ML Cyber Defense 2018 Challenge Problem

The Challenge Problem is to address a series of specific questions about defending against AI/ML vulnerabilities in multiple settings, including data centers, networks, cyber-physical systems, and the Internet of Things (IoT).

Some suggested questions to be addressed include:

- What methods or techniques could be used to detect and mitigate attacks against Machine Learning?

- Given an implementation of an AI/ML defense mechanism in one of the settings listed above, how would an adversary degrade that mechanism?

- With an understanding of the malicious actors’ approach to defeating the AI/ML defense mechanisms, how does the defender strengthen the AI/ML system to resist attacks?

- How does a defender distinguish between a traditional cyber attack and an attempt to corrupt defensive or operational AI/ML?

- How can AI/ML be implemented to increase its resilience in a complex network such as a cyber-physical system or the IoT?

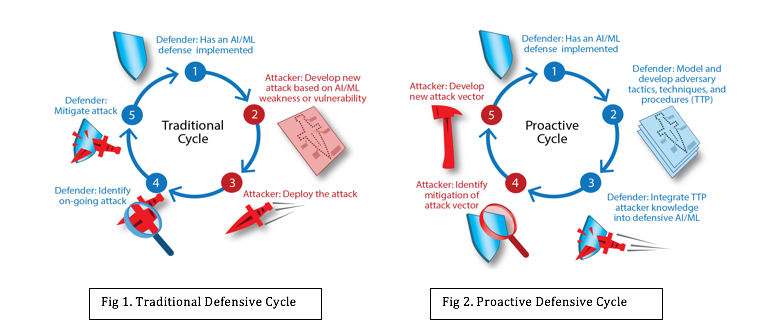

- How does the defender change the current model of “identify, mitigate, redeploy, and re-identify”? A solution that shifts the paradigm from the traditional defensive model (Fig 1) to a more proactive defensive cycle (Fig 2) would reduce the likelihood of an attack and shorten the time from discovery to mitigation.

Note: A successful effort would address one or more of these questions.
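As one possible starting point for the first question (detecting attacks against machine learning), the sketch below shows a k-nearest-neighbour label-consistency check, a simple data-sanitization heuristic that flags training samples whose label disagrees with the majority of their neighbours. The data and the choice k = 3 are hypothetical. A check like this catches only crude mislabeled injections; clean-label poisoning, where the injected points carry plausible labels, would pass it.

```python
def knn_label_disagreement(data, k=3):
    """Return indices of samples whose label disagrees with the majority label
    of their k nearest neighbours (the sample itself is excluded)."""
    suspicious = []
    for i, (xi, yi) in enumerate(data):
        # (squared distance, label) for every other training sample, nearest first.
        neighbours = sorted(
            (sum((a - c) ** 2 for a, c in zip(xi, xj)), yj)
            for j, (xj, yj) in enumerate(data)
            if j != i
        )
        labels = [y for _, y in neighbours[:k]]
        majority = max(set(labels), key=labels.count)
        if majority != yi:
            suspicious.append(i)
    return suspicious

# Hypothetical training set: a benign cluster, a malicious cluster, and one
# injected sample placed among the malicious points but labelled benign.
training_set = [([0, 0], 0), ([1, 0], 0), ([0, 1], 0), ([1, 1], 0),
                ([4, 4], 1), ([5, 4], 1), ([4, 5], 1), ([5, 5], 1),
                ([4.5, 4.5], 0)]

print(knn_label_disagreement(training_set, k=3))  # [8]
```

Flagged samples would typically be reviewed or dropped before retraining; an odd k avoids ties in the binary-label case.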

Participants should provide one or more of the following demonstrations:

- Present a clear threat model addressing technical and behavioral elements. This may take a multidisciplinary approach.

- Present an implementation of an AI/ML/DS defensive model to detect and resist known and potential malicious software attacks.

- Develop an analytic process to anticipate and model adversary mechanisms to corrupt AI/ML/DS defenses.

- Identify software attack methods to defeat or confuse the AI/ML/DS defensive system.

- Identify approaches to strengthening defensive AI/ML/DS to defeat modified attack vectors that attempt to corrupt the defense mechanisms.

- Develop measurement techniques to distinguish traditional cyber attacks from attempts to poison the AI/ML/DS defense or mission systems.

- Identify methods to detect AML efforts to infer information about training sets, construct data points to be mishandled by ML algorithms, and/or extract information about model parameters.

- Identify methods for measuring the risk, consequences and mitigation of threats.
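Extracting model parameters, one of the threats listed above, can be cheap for simple models. The sketch below follows the equation-solving idea described by Tramèr et al., whose Steal-ML code is listed under Research Datasets: if a hypothetical prediction API returns the exact class probability of a logistic-regression model with d features, inverting the sigmoid on d + 1 chosen queries recovers the weights and bias. The victim parameters are made up. For detection, such systematic probes (the origin, then unit vectors) are one possible signal, and returning only labels or rounded scores raises the attacker's cost, though neither is a complete defense.

```python
import math

def make_victim(w, b):
    """Hypothetical prediction API that returns only P(class 1 | x)."""
    def api(x):
        z = sum(wi * xi for wi, xi in zip(w, x)) + b
        return 1.0 / (1.0 + math.exp(-z))
    return api

def logit(p):
    """Inverse of the sigmoid: recovers w.x + b from a returned probability."""
    return math.log(p / (1.0 - p))

def extract_logistic(api, dim):
    """Equation-solving extraction with dim + 1 queries.

    Query the origin to learn b, then each unit vector e_i to learn w_i + b.
    """
    b = logit(api([0.0] * dim))
    w = []
    for i in range(dim):
        e_i = [0.0] * dim
        e_i[i] = 1.0
        w.append(logit(api(e_i)) - b)
    return w, b

secret_w, secret_b = [2.0, -1.0, 0.5], -0.5  # made-up victim parameters
stolen_w, stolen_b = extract_logistic(make_victim(secret_w, secret_b), dim=3)
print([round(v, 6) for v in stolen_w], round(stolen_b, 6))  # [2.0, -1.0, 0.5] -0.5
```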

Research Datasets

A publicly accessible dataset is available for studying these issues: EMBER: An Open Dataset for Training Static PE Malware Machine Learning Models https://arxiv.org/abs/1804.04637

Other possible sources of AI/ML/DS research datasets include:

- https://github.com/ftramer/Steal-ML

- https://github.com/RandomAdversary/Awesome-AI-Security

- https://github.com/tensorflow/cleverhans

- https://github.com/rfeinman/detecting-adversarial-samples

- https://github.com/bethgelab/foolbox

Additional Comments

Each of the demonstrations should employ AI/ML/DS to enhance the defensive capabilities against a malicious actor’s attempts to circumvent these improvements.

What does each cycle learn that is implemented in the next cycle? How does the system improve the defensive posture? What might a malicious actor do to improve their threat vectors given improved defenses?

Do any of the models or behaviors from the 2016-2017 Ransomware Challenge apply here? Is there AI/ML/DS embedded in our Critical Infrastructure that a malicious actor could compromise in order to hold the operator hostage and extract a ransom? The article “Artificial Intelligence Can Drive Ransomware Attacks” by Martin Beltoc, published in ISBuzz News on September 21, 2017, provides some insight into this topic.

Potential Research Funding Support

For the 2017 C3E Workshop, NSF funded a small grant to stimulate research interest in the Cybersecurity Challenge Problem. This year, the C3E Support Team will again apply for NSF funds to support analytic research on the AI/ML/DS Challenge Problem for the 2018 C3E Workshop. Last year, recipients of small stipends, selected through peer review, were required to perform the research and to present their results in a panel and poster session at the C3E Workshop. In addition, they were required to submit a paper for inclusion in a peer-reviewed technical journal. The C3E Team anticipates a similar process for C3E 2018.

Persons interested in engaging in this research project should send a letter of interest requesting more information to the Co-PIs: Dan Wolf at dwolf@cyberpackventures.com and Don Goff at dgoff@cyberpackventures.com.

References - Government Sources

“Artificial Intelligence for the American People,” Donald Trump, White House Fact Sheet, May 10, 2018, https://www.whitehouse.gov/briefings-statements/artificial-intelligence-american-people/

“Preparing for the Future of Artificial Intelligence,” Executive Office of the President, National Science and Technology Council Committee on Technology, October 2016, https://obamawhitehouse.archives.gov/sites/default/files/whitehouse_files/microsites/ostp/NSTC/preparing_for_the_future_of_ai.pdf

“The National Artificial Intelligence Research and Development Strategic Plan,” National Science and Technology Council, Networking and Information Technology Research and Development Subcommittee, October 2016, https://obamawhitehouse.archives.gov/sites/default/files/whitehouse_files/microsites/ostp/NSTC/national_ai_rd_strategic_plan.pdf

Additional references from technical publications, recent journals, and magazines.

See the following Science of Security webpage for an additional list of recent articles that discuss machine learning (ML), artificial intelligence (AI), and decision support vulnerabilities: https://cps-vo.org/node/54662